June 07, 2007

Project

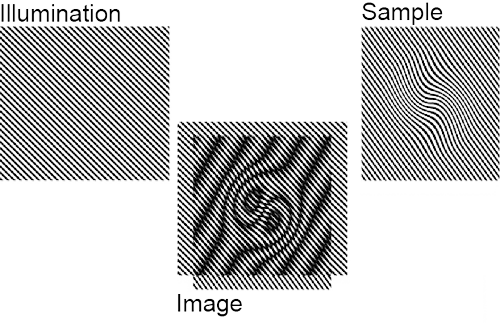

Fluorescence microscopy is a popular and important imaging technique that has pretty much conquered the world of experimental biology. Among its many virtues is the fact that it is fairly non-destructive, meaning it can be used to view live samples in approximately physiological conditions. All kinds of sophisticated variations have been developed around it, but because it uses visible light to make the image it eventually runs up against a pretty hard constraint on just how much detail can be made out, known as the diffraction limit. Once you get down to a few hundred nanometres or so, things pretty much fuzz out and you just can't make out what's going on.

This is pretty frustrating, because there's a lot happening down at that level and beyond, some of which is pretty important. Obviously there's no real bound to how much more detail biologists would like to have -- in the same way that you never have enough bandwidth or RAM[1] or, you know, days left in your life -- but even just a bit more would be an improvement for a whole range of interesting cellular structures and behaviour.

And while there are other imaging processes, such as electron microscopy and various scanning probe methods, that are capable of squinting down to those scales, most of them have to be done in destructive, non-physiological conditions; and/or they're surface bound, unable to see anything going on inside the cell in the way optical fluorescence microscopy can. So, ideally, we would like to be able to extend the resolution of the latter beyond that pesky diffraction limit. As it turns out, there are some ways to do just that, and the one at hand is called structured illumination microscopy -- because it works by imposing a structure on the light you shine on the subject.

To understand how it works, we need to take a very quick detour into the frequency domain. We generally think of images in spatial terms: this area over here is dark, that one over there is stripy, whatever. However, it is also possible to think of them as the superposition of a lot of regular repeating patterns or waves. It may not be immediately obvious why you would want to do so, but it turns out to be very useful in all kinds of circumstances.[2] So for any image in the spatial domain, defined in terms of positions, there is a corresponding image in the frequency domain, defined in terms of waves. The two representations are theoretically equivalent -- the spaces are reciprocal -- and you can switch back and forth between them with a certain amount of computational effort (and loss of precision).

Small details in the spatial domain -- which are what we want our microscope to resolve -- correspond to high frequencies. What the diffraction limit in effect means is that there's only a certain region of the frequency domain that a beam of light can convey. Anything outside that region gets lost. If we want to capture more detail -- which lives outside that observable region -- we need somehow to smuggle it back in via the medium of coarse, low-frequency, observable features. The vehicle for doing so in structured illumination is moiré fringes.

These patterns, which occur often enough in everyday life to be familiar -- think of net curtains or chain-link fences or window screens and their shadows -- result from the overlap of repeating features with different frequencies. As should be evident from the image above, the frequency of the joint pattern is lower than that of the contributing patterns, and thus can remain comfortably within the observable range even for at least some details inaccessible directly. In fact, by imposing a frequency close to the resolution limit on the illumination, we can make available spatial frequencies in the target up to roughly twice what would usually be resolvable.[3] That is, we can double the spatial resolution.
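A one-dimensional toy version of the moiré trick may make this more concrete. Everything specific in it is an assumption picked for illustration: 240 samples across the field, a hard cutoff at 40 cycles standing in for the diffraction limit, sample detail at 70 cycles and illumination stripes at 38. Multiplying two cosines gives sum and difference frequencies, cos a · cos b = ½[cos(a − b) + cos(a + b)], so the detail at 70 should reappear as a fringe at 70 − 38 = 32, inside the observable region.

```python
import cmath
import math

N = 240          # samples across the field of view (assumed)
CUTOFF = 40      # highest frequency the "optics" passes, in cycles per field (assumed)
F_SAMPLE = 70    # fine detail in the sample, beyond the cutoff (assumed)
F_ILLUM = 38     # illumination stripes, just inside the cutoff (assumed)


def dft_magnitudes(signal):
    """Return |DFT| / N for frequencies 0..N/2, using a naive O(N^2) transform."""
    n = len(signal)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                  for i, v in enumerate(signal))
        mags.append(abs(acc) / n)
    return mags


def strong_frequencies(signal, threshold=0.05):
    """List the non-zero frequencies whose magnitude exceeds the threshold."""
    return [k for k, m in enumerate(dft_magnitudes(signal))
            if k > 0 and m > threshold]


x = [i / N for i in range(N)]
sample = [1 + math.cos(2 * math.pi * F_SAMPLE * t) for t in x]
illum = [1 + math.cos(2 * math.pi * F_ILLUM * t) for t in x]

# Fluorescence emitted is (roughly) sample structure times local illumination.
emitted = [s * p for s, p in zip(sample, illum)]

# Keep only what falls inside the observable region.
uniform_visible = [k for k in strong_frequencies(sample) if k <= CUTOFF]
structured_visible = [k for k in strong_frequencies(emitted) if k <= CUTOFF]

print("uniform illumination, inside cutoff:   ", uniform_visible)
print("structured illumination, inside cutoff:", structured_visible)
```

With these numbers the uniformly lit sample shows nothing inside the cutoff, while the striped version shows the stripes themselves (38) and the moiré fringe (32) that carries the otherwise invisible detail. A real microscope blurs with a smooth transfer function rather than a hard cutoff, so treat this as a cartoon only.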

Indeed, by exploiting non-linearities in the optical response, even higher resolutions are possible. But, of course, there's a problem.

Reconstructing the higher resolution image is computationally intensive; that's unfortunate, but not prohibitive. But, much more seriously, more detail requires more contributory frames. And this is a really big problem because capturing each frame takes time. If you're dealing with living biological specimens -- which, after all, was our main argument in favour of optical fluorescence -- they're most likely going to move. And if they do, the whole process falls down.

You see, the reconstruction depends on the information you want to recover remaining the same (within some pretty small tolerance) from one frame to the next. If it doesn't, then when you integrate across the frames what you reconstruct will be, basically, garbage.
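Here is a minimal sketch of why movement hurts, under heavy assumptions. It is not a real reconstruction (which has to separate and shift frequency components); it only checks the simplest thing that the frames are supposed to do together, namely that three equally spaced phase steps of the stripes cancel out when averaged. The blob-shaped sample, the 120-pixel field, the stripe frequency and the 3-pixel drift are all made up for the example.

```python
import math

N = 120        # samples across the field (assumed)
F_ILLUM = 10   # illumination stripes per field (assumed)
PHASES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]   # three equally spaced steps


def sample_at(i, shift=0):
    """A made-up fluorescent structure: one bright blob on a dim background."""
    centre = N / 2 + shift
    return 0.2 + math.exp(-((i - centre) ** 2) / 50.0)


def capture(phase, shift=0):
    """One raw frame: the (possibly shifted) sample times the striped light."""
    return [
        sample_at(i, shift)
        * (1 + math.cos(2 * math.pi * F_ILLUM * i / N + phase))
        for i in range(N)
    ]


def stripe_residue(shifts):
    """Average the frames and report the largest leftover stripe artefact.

    The comparison is against the mean of the sample positions, so plain
    motion blur is not counted: only the stripes that failed to cancel are.
    """
    frames = [capture(p, s) for p, s in zip(PHASES, shifts)]
    averaged = [sum(col) / len(frames) for col in zip(*frames)]
    expected = [
        sum(sample_at(i, s) for s in shifts) / len(shifts) for i in range(N)
    ]
    return max(abs(a - e) for a, e in zip(averaged, expected))


static_error = stripe_residue([0, 0, 0])
moving_error = stripe_residue([0, 3, 6])   # sample drifts 3 pixels per frame

print(f"static sample, leftover stripes: {static_error:.2e}")
print(f"moving sample, leftover stripes: {moving_error:.2e}")
```

For the static sample the leftover is down at floating-point noise; once the sample drifts between frames the stripes no longer cancel and a visible artefact remains. The full reconstruction relies on the same kind of cancellation, which is why it is so sensitive to motion.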

One way to improve the situation is to speed up the frame capture. In most current implementations -- and there are actually a couple of commercially available microscopes that support this technique, though as far as I can tell only for one-dimensional depth sectioning rather than 2D lateral resolution -- the frame capture is painfully slow, mostly because of the way in which the light pattern is created and positioned. Basically, a big chunk of glass is physically moved about in the illumination pathway, a tremendously cumbersome process. Our proposal -- and at the moment it is nothing but pie in the sky -- is to replace this whole mess with a computer-controlled LCD setup.

And that's where I come in.

While on the face of it a purely electronic solution should be much faster and more versatile, opening up all sorts of new possibilities that the current optics don't support, it is far from clear that it is even possible to generate the necessary illumination patterns via an LCD with sufficient consistency and precision as to be able to integrate them convincingly. LCDs have their own set of interesting optical properties, and of course tend to come in neatly rectangular pixel arrays, which don't correspond to the angled line gratings required for lateral resolution recovery.
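To give a feel for the pixel-grid problem, here is a small sketch that draws stripes on an idealised on/off pixel array. It is purely illustrative: `lcd_grating` is a hypothetical helper, each pixel is simply switched on or off according to an ideal sinusoid sampled at its centre, and nothing about real LCD optics (grey levels, fill factor, polarisation, response time) is modelled.

```python
import math


def lcd_grating(width, height, period_px, angle_deg, phase=0.0):
    """Binary stripe pattern on a rectangular pixel grid (hypothetical helper).

    A pixel is on (1) where an ideal sinusoid of the given period, measured
    along the stripe normal at the pixel centre, is non-negative, else off (0).
    """
    theta = math.radians(angle_deg)
    nx, ny = math.cos(theta), math.sin(theta)   # unit vector across the stripes
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            distance = (x + 0.5) * nx + (y + 0.5) * ny
            value = math.cos(2 * math.pi * distance / period_px + phase)
            row.append(1 if value >= 0 else 0)
        rows.append(row)
    return rows


def show(pattern):
    """Print the pattern as ASCII art, '#' for on and '.' for off."""
    for row in pattern:
        print("".join("#" if v else "." for v in row))


# Stripes aligned with the pixel columns: every row is identical.
show(lcd_grating(24, 8, period_px=8, angle_deg=0))
print()
# Stripes rotated by 30 degrees: the edges become staircases.
show(lcd_grating(24, 8, period_px=8, angle_deg=30))
```

The aligned grating comes out clean, but the rotated one has jagged edges whose shape changes with angle and phase. Whether that kind of irregularity matters after it has passed through the optics is exactly the sort of question the modelling would have to answer.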

So what I'm going to be doing for the next 12 weeks or so is trying to model all this to determine whether the idea is worth pursuing and, if so, to lay down some basic proposals for how such a system should work, what its parameters might be, and just what kind of computation will be needed to get a decent image out of it.

Needless to say, I know nothing about any of this. Let's call it a learning experience...

[1] Although nobody could ever need more than 640k, obviously.

[2] Several popular compression techniques for images, sound and videos are based on this, for example.

[3] If we could project arbitrarily fine patterns onto the sample, it would probably be possible -- although extremely laborious -- to reconstruct arbitrarily-high levels of detail. But we can't: the projected pattern is also constrained by the diffraction limit.

Posted by matt at June 7, 2007 10:42 PM
